There’s no shortage of excitement around AI in education. Generative AI tools, like ChatGPT, have made their way into classrooms and seem to offer endless possibilities for helping students (and teachers) with everything from research to problem-solving. But we need to be careful: AI can either support or hinder learning, and it all depends on how it’s used.

A recent research study, “Generative AI Can Harm Learning,” takes a hard look at this, revealing how AI can impede learning if not thoughtfully implemented. You can read the full research here, but in this post, rather than dwell on the paper’s clickbait title, I want to focus on what we can learn from it and, more importantly, how we can ensure AI enhances learning rather than undermining it.

It’s important to note that in this study, they didn’t use ChatGPT exactly as you or I might. Instead, they developed two AI models based on GPT-4, one resembling the functionality of ChatGPT (called GPT Base) and another specifically designed to support learning (GPT Tutor). The differences between these two models provide valuable insights into how we can, and should, be using AI in education.

ChatGPT vs. GPT Base vs. GPT Tutor: What’s the Difference?

The study compared two AI models, GPT Base and GPT Tutor, both built using GPT-4 technology, but functioning differently.

GPT Base worked much as you or I might use ChatGPT: it gave direct answers to questions. GPT Tutor, however, acted more like a Socratic tutor, offering incremental guidance without directly giving away the answers. This method is vital because it pushes students to think critically and engage with the material, rather than passively accepting answers.
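To make that difference concrete, here is a minimal sketch of how the two modes might be set up. The prompts, the `build_messages` helper, and the sample question are all hypothetical and written purely for illustration; they are not the prompts or code used in the study. The `role`/`content` message shape is a common convention for chat models and is assumed here. Nothing is sent to any AI service.

```python
# Hypothetical system prompts: illustrative only, NOT the prompts used in the study.
BASE_PROMPT = "You are a helpful assistant. Answer the student's question."
TUTOR_PROMPT = (
    "You are a tutor. Do not reveal the final answer. "
    "Give one small hint at a time and ask the student what they would try next."
)


def build_messages(mode: str, student_question: str) -> list[dict[str, str]]:
    """Return a chat-style message list for the chosen mode ('base' or 'tutor')."""
    prompts = {"base": BASE_PROMPT, "tutor": TUTOR_PROMPT}
    if mode not in prompts:
        raise ValueError(f"unknown mode: {mode!r}")
    return [
        # The system message sets how the model should behave.
        {"role": "system", "content": prompts[mode]},
        # The user message carries the student's question unchanged.
        {"role": "user", "content": student_question},
    ]


if __name__ == "__main__":
    question = "Solve 3x + 5 = 20"  # made-up example question
    for mode in ("base", "tutor"):
        system_message = build_messages(mode, question)[0]
        print(f"{mode}: {system_message['content']}")
```

The student’s question is identical in both cases. The only thing that changes is the instruction wrapped around it, which is the point: the same underlying model can behave like an answer machine or like a tutor depending on how it is set up.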

Here’s a comparison of how each system worked and their impact on student learning:

| Feature | GPT Base (ChatGPT-like) | GPT Tutor (Socratic-like) | Standard ChatGPT |
| --- | --- | --- | --- |
| Mode of Assistance | Provides direct answers to questions. | Offers incremental hints and guides problem-solving. | Provides answers, often detailed and direct. |
| Student Engagement | Passive learning: students relied on AI for answers. | Active learning: students had to engage with the material. | Passive learning unless guided otherwise. |
| Impact on Short-term Learning | 48% improvement in practice tasks. | 127% improvement in practice tasks. | Variable: depends on use cases and prompts. |
| Impact on Long-term Learning | 17% drop in performance once AI was removed. | No significant drop: students retained problem-solving skills. | Similar risks as GPT Base if over-relied upon. |
| Common Use Case | Finishing tasks quickly, often without deep engagement. | Developing problem-solving skills over time. | Generalised tool for broad use cases. |

What This Teaches Us About AI and Tutoring

The table above highlights the stark differences in how these AI models influence learning. The key takeaway is that GPT Base, much like ChatGPT in its standard form, can provide quick answers but risks turning into a crutch if students use it as a shortcut. GPT Tutor, by contrast, is structured to mimic the guidance of a good tutor, encouraging deeper engagement and problem-solving. This is critical because we don’t want AI simply to help students complete tasks; we want it to enhance their understanding and boost their independent learning skills.

This approach echoes what we know about good tutoring practices, and more specifically, the Socratic method of asking guiding questions rather than giving answers outright.

The Importance of Thoughtful AI Implementation

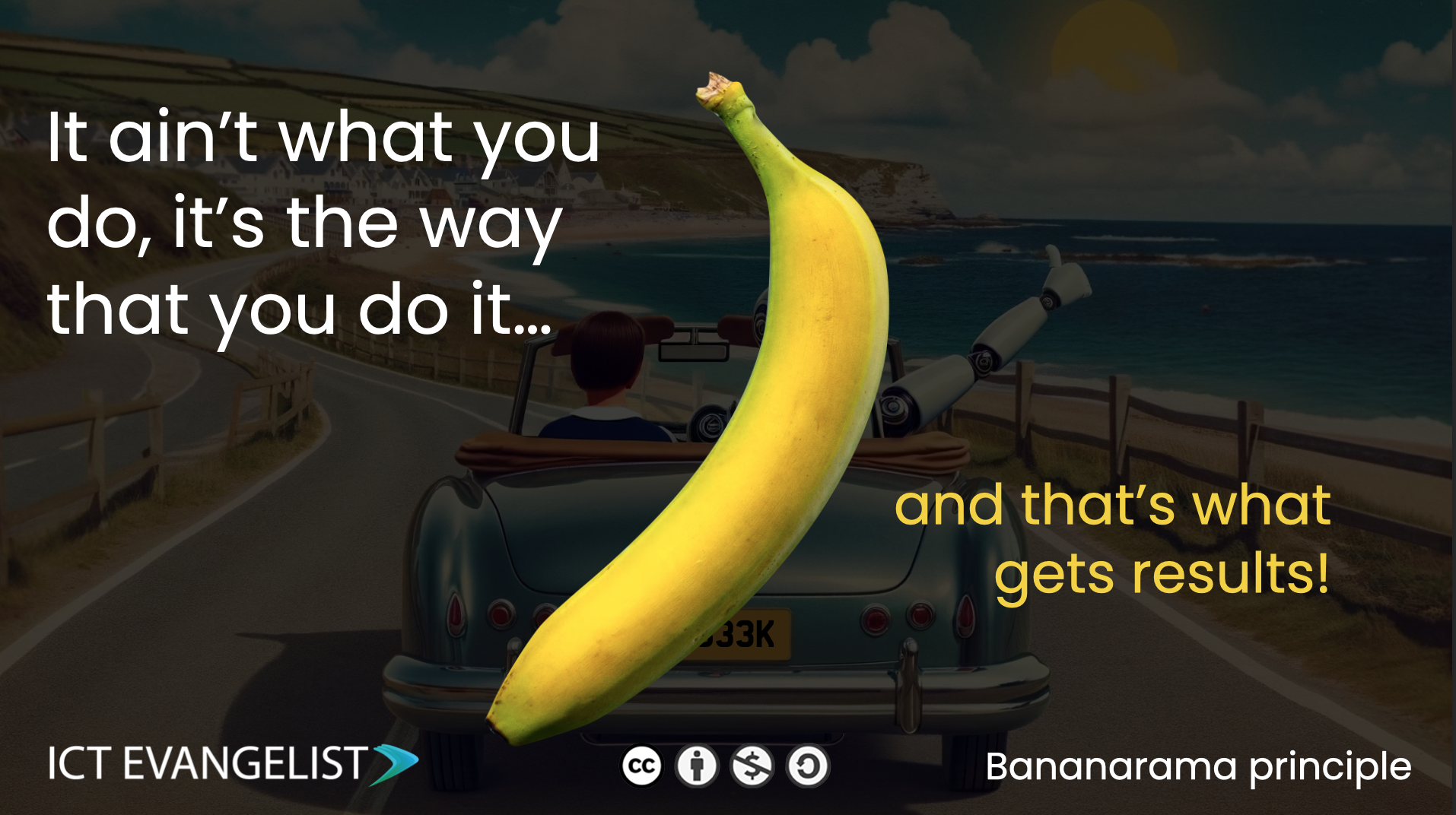

As I’ve said many times before with my Bananarama principle: It Ain’t [so much] What You Do, It’s The Way That You Do It (and that’s what gets results).

This principle applies perfectly to how we approach AI in education. The research shows that when AI tools are used without thought for how students learn, as with GPT Base, they may give short-term results but fail to promote long-term learning. We can’t afford to treat AI as just another tech trend; it needs to be implemented strategically.

It reminds me of the debate around Bloom’s 2 Sigma Problem, which suggested that one-on-one tutoring could raise student performance by two standard deviations. While some aspects of this idea have been debunked (Paul von Hippel covers this well in his critique), the core message remains: Good tutors don’t just give answers, they guide students to think critically and solve problems. This is exactly how AI needs to be positioned if it’s going to have a positive impact in education.
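If “two standard deviations” feels abstract, it can be turned into a percentile. The short sketch below assumes test scores follow a normal (bell-curve) distribution, which is a simplification, and uses a hypothetical helper name, `percentile_after_shift`. It shows where an average student would sit in the comparison group after moving up by a given number of standard deviations.

```python
from statistics import NormalDist


def percentile_after_shift(effect_size_sd: float) -> float:
    """Percentile of the comparison group reached by an average student
    whose score rises by `effect_size_sd` standard deviations.

    Assumes normally distributed scores (a simplifying assumption).
    """
    # The standard normal CDF gives the share of the comparison group scoring lower.
    return NormalDist().cdf(effect_size_sd) * 100


if __name__ == "__main__":
    print(round(percentile_after_shift(2.0), 1))  # prints 97.7
```

In other words, a two-sigma gain would lift a typical student to roughly the 98th percentile of a conventionally taught class, which is why the claim attracted so much attention and so much scrutiny.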

Building a Strong Digital Strategy

As I’ve long argued, and as I’ve seen in my work supporting many schools, the key to making AI work in education is having a clear digital strategy. It’s not enough to just put AI tools like ChatGPT into students’ hands and hope for the best. Schools need a thoughtful, structured approach that aligns with their educational goals and ensures that AI is supporting deeper learning, not undermining it.

I’ve been helping schools across the world develop effective strategies through my Digital Cognition framework, which focuses on integrating technology like AI in ways that foster critical thinking and problem-solving. This framework helps schools build AI literacy into their curriculum, giving students and teachers the skills to use AI tools effectively and responsibly. It’s not just about using AI; it’s about understanding how it works, what it’s good at (and not so good at), and when to use it.

And for those just getting started, don’t forget you can download the Guide to Creating a Digital Strategy in Education, which I co-authored with Al Kingsley, at schooldigitalstrategy.com.

This guide is designed to help schools navigate the challenges of integrating technology in a way that enhances learning for all students.

AI Literacy: Essential for Teachers and Students

We’re at a point where AI literacy is just as important as traditional literacy. Both students and teachers are increasingly using AI tools in the classroom, and it’s more important than ever that they know how to use them effectively. This isn’t just about knowing how to ask ChatGPT a question; it’s about understanding when and why to use AI, and recognising its strengths and weaknesses.

Through my consultancy work (and as a parent supporting my children), I’ve seen firsthand how AI literacy can empower both teachers and students. It helps them develop a more sophisticated understanding of AI, turning them into active learners rather than passive users.

When integrated correctly, AI becomes a tool for engagement and deeper learning, rather than a shortcut for quick answers.

This extends to teachers, too. Schools need to support staff in using AI effectively, from lesson planning to classroom delivery. Tools like GPT Tutor show us that AI can be used to foster independent learning, but only when we have the right systems in place.

Teachers need the training and understanding to make the most of these tools, ensuring they complement their teaching rather than compete with it.

Making AI Work: A Call for Thoughtful Implementation

At the end of the day, AI can be a game-changer in education, but only if we’re smart about how we use it. We’ve seen the pitfalls of many technologies over the years when they are used without careful consideration, and AI is no exception. We’ve also seen how technology can be transformational when built into a structured, well-thought-out digital strategy.

This is where schools need to be proactive. It’s not about whether AI will have a place in education; it already does. The question is how schools will embrace and manage this technology to ensure that it serves their long-term goals for student success.

Over the years, I’ve worked with schools across the UK and beyond, helping them develop digital strategies that integrate AI in a way that supports both teachers and students. Whether through INSET days, workshops, or consultancy, I’ve seen how the right strategy can transform a school’s approach to technology. If you’re looking for help with your school’s digital strategy, or you want to explore how to integrate AI responsibly, I’m here to support you.

You can contact me directly for advice or to book a session at ICTEvangelist.com/contact.

In Summary

The research is clear: AI can harm learning if we’re not careful. But with the right approach, it can also be a game-changer. By focusing on AI literacy and creating thoughtful strategies for how we use these tools, we can ensure that AI enhances learning and empowers students to think critically and solve problems on their own.

Remember the Bananarama principle: AI isn’t a magic bullet, but with the right strategy, it can make a real difference to learning, teaching, and workload reduction.